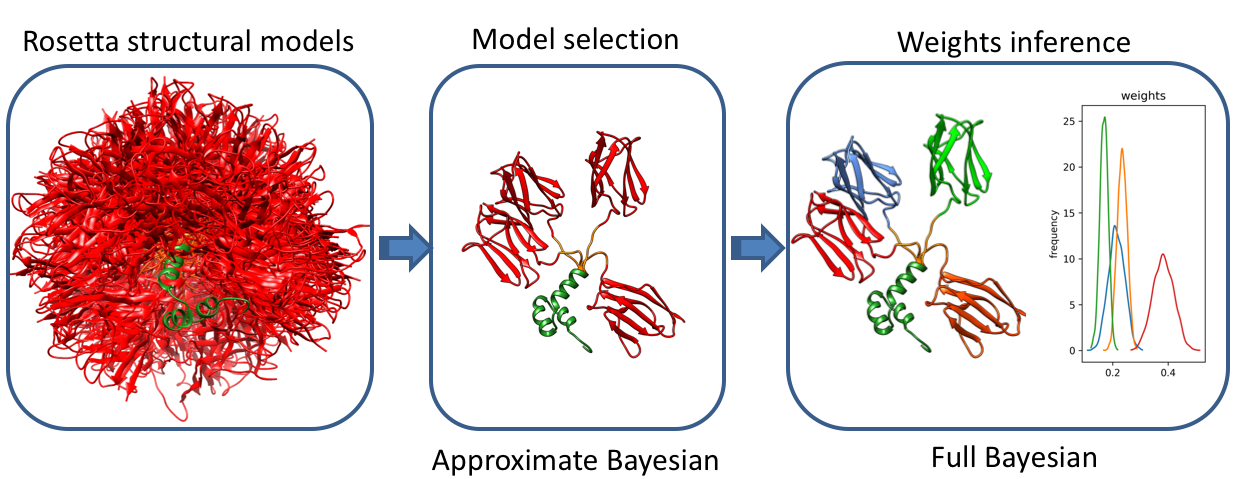

Method overview

A method based on Bayesian statistics that infers conformational ensembles from a structural library generated by all-atom Monte Carlo simulations. The first stage of the method involves a fast model selection approach based on variational Bayesian inference that maximizes the model evidence of the selected ensemble. This is followed by a complete Bayesian inference of population weights in the selected ensemble.

Installation

- Download the latest 64-Bit Python 3.7 Installer from Anaconda and follow instructions.

- Download bioce-1.0.zip file and unzip it:

unzip bioce-1.0.zip - Install dependencies using yml file in directory where yml file is located

cd bioce-1.0 conda env create -f bioce.yml - Activate conda enviroment

source activate bioce - Build and install software (use –user flag if you want to install it for just single user)

python setup.py build - Check if scripts start up

python fullBayesian.py --help python variationalBayesian.py –-helpIf you see no errors but options menu pops up, you are good to go.

Problems with installation on OSX 10.14 (Mojave)

There is a known issue with xcode installation on OSX 10.14 (Mojave) If you see the following error:

test.c:8:10: fatal error: stdio.h: No such file or directory

#include <stdio.h>

^~~~~~~~~

compilation terminated.

please try the installation of the following package

open /Library/Developer/CommandLineTools/Packages/macOS_SDK_headers_for_macOS_10.14.pkg

There may also be issues on with running variationalBayesian.py. The solution to this may be compiling the module manually. This would require running compile.sh file (you may need to change permission to this file).

https://github.com/Andre-lab/bioce/blob/master/compile.sh

I am aware this is not optimal solution and proper fix will hopefully come soon

Running examples

Generating input data

The above example assumed that all input data are in the right format. This may however not be the case when you start from a set of PDB models We assume here that you have generated set of structural models and you have experimental scattering on NMR chemical shifts data available.\ You can refer to The typical workflow may look as follows.

- Run script to generate scattering curves

This requires FoXS to be installed and avaialable through PATH.

Since you have already installed anaconda on your machine,

the easiest way to obtain FoXS is to follow installation guide for IMP (which FoXS is part of)

at: IMP installation

Once FoXS is installed, simply run:

prepareBayesian.py -s strcuture_lib_dir -e experimental_dataApart from SimulatedIntensities.txt, which contains tabularized intensities for each model, a file with starting weights and a list of files is generated. These files are needed to run Bayesian inference in the next step.

- Run variational Bayesian inference

Once you prepare input files as described in step 1, you can run model selection using variational Bayesian inference:

python ../variationalBayesian.py -s SimulatedIntensities.txt -e simulated.dat -f structures.txt -p weights.txt -w 0.01 -o outputwhere w is the weight therhold used for prunning models in after each iteration

- Run complete Bayesian inference

Following model selection with Variational Bayesian one can infer population weights for the subset of models inferre with VBI.

This can be done by simply running:

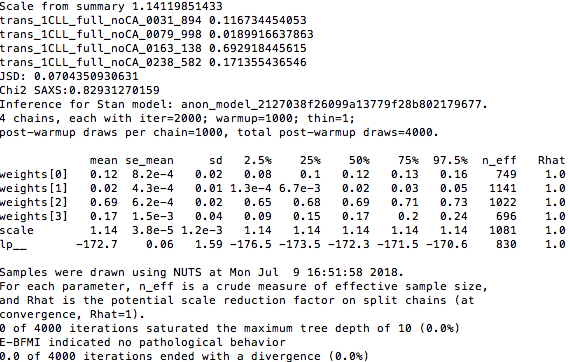

python ../fullBayesian.py -p weights.txt -s SimulatedIntensities.txt -e simulated.dat -f structures.txtOutput

- You should get simillar output to the one bellow:

-

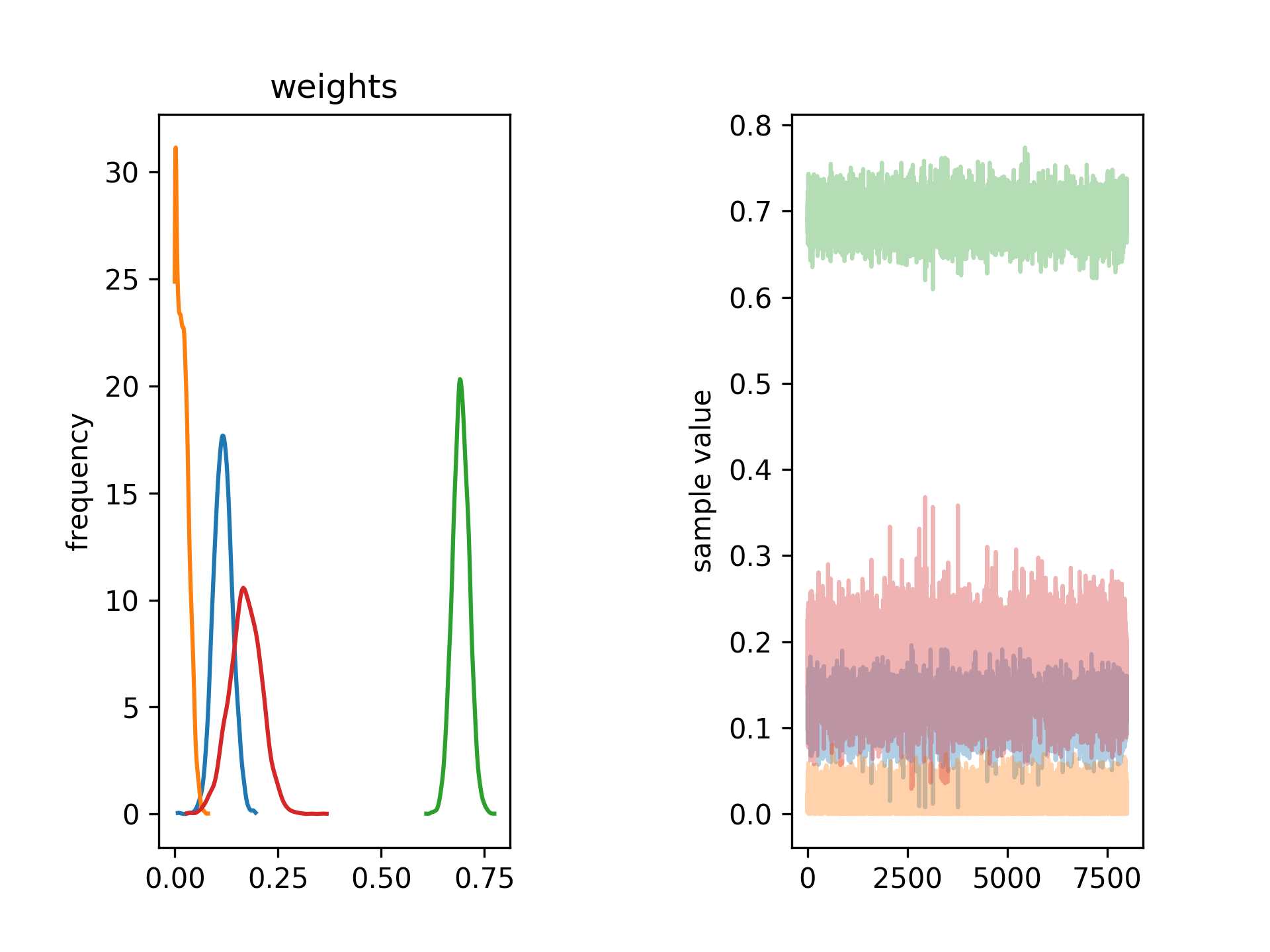

Script also produces two images stan_weights.png and stan_scale.png, which graphically ilustrated distribution of population weights (shown below) and scaling parameter

- Script also returns text file containing Q vector, experimental intensity, model intensity and experimental error.

Using chemical shift data

- In order to use chemical shift data, one needs to install SHIFTX2. This can be done by following instructions at:

SHIFTX2. This requires running python 2.6 or later, which won’t work with

conda bioce enviroment. You will need to deactivate envioremnt before proceeding:

source deactivateOnce shiftx2 is installed, cd to directory with pdbs and run:

python shiftx2.py -b '*.pdb' -f csvThis will run batch job to process all pds and generate file in csv format. Please refer to shiftx2 manual for further options

- Run a script that converts shiftx2 ouptut to input files for Bayesian inference

prepareChemicalShifts.py -s strcuture_lib_dir -e experimental_dataThis will generate a few files: cs.dat, cs.err with simulated chemical shifts and errors from PDB structures and cs_exp.dat, which contains experimental data in the aproprinate format.

Using structural energies

Up to now any method could be used to a pool structural models and we assumed that all models are equaly probable (by assaigining equal weights to all of them). However one can also use information about energy evaluated for each structural models. We use Rosetta to generate structural library and each of the models comes with energy value. In order to make use of them, one needs to simply save them in the text file and tell scripts to use them by suppling “-P” instead of “-p” flag, e.g.

python ../variationalBayesian.py -P energies.txt -s TrmSimulatedIntensities5models.dat -e synthetic_60p.dat -f names5models.txt -w 0.01

Analyzing time-resolved data set

In order to analyze data from time-resolved SAS experimenent where multiple data sets are available one should use fullBayesianTR.py script. It requiers the same input files and parameters as a single file version, however now experimental file is the list of files eperimental files (experimental_list) In addition the script also reads in errors of the simulated scattering pattern givem for each q value. And in order to run script iteratively one can specify iteration with ‘-I’ parameter, e.g.

python ../fullBayesianTR.py -p weights.txt -s SimulatedInitensities.txt -E SimulatedErrors.txt -e experimental_list -o batch_results -f file_list -i 2000 -j 4 -c 4 -I 0

The fullBayesianTR.py script produces iteration_* folder, which contains necessary python files. In order to perform Gaussian Processing regression to smooth weights one needs to run:

python ../gpSmoothing iteration_0/batch_results

gpSmoothing script will produce a set of weights for each specie and save them to prior_matrix.txt file. This file can be used as a input for next iterations

Webserver

Webserver coming up soon!